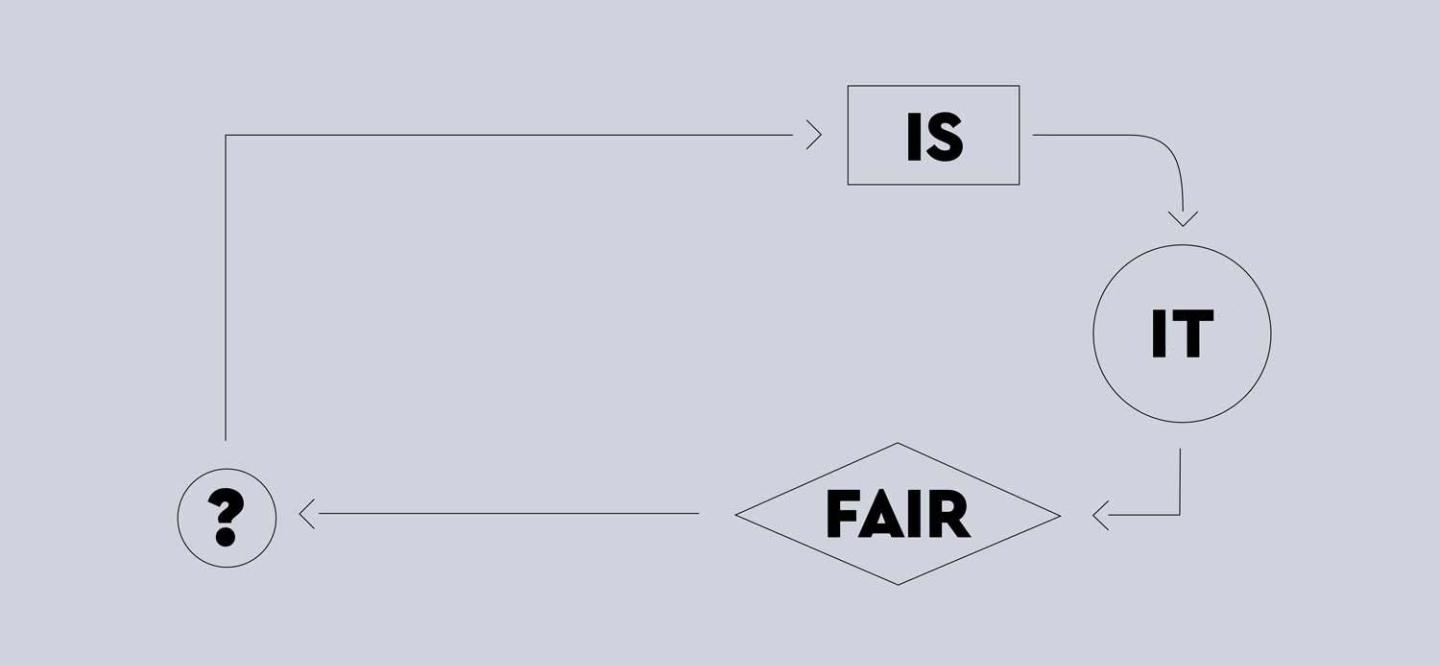

Is it fair? Algorithms and asylum seekers

#Artificial Intelligence

Europe is experimenting with automated decision-making to manage asylum and migration processes. Researchers in an international project are investigating whether and how the use of algorithms can contribute to fairness.

The war in Ukraine is turning millions of people into refugees. Berlin's main train station was and still is an important stop for many of them: from here, they continue their journey by train, take a plane or stay in the city. The fact that Ukrainian refugees can enter the EU without visas and decide for themselves where to settle is an exceptional case, emphasizes Cathryn Costello, Professor of Fundamental Rights at the Hertie School in Berlin and Co-Director of the Hertie School’s Centre for Fundamental Rights. Other refugees seeking protection in the EU as asylum seekers usually need a visa to enter the Schengen Area, which they are rarely able to obtain.

In many European countries, new technologies for managing asylum seekers and migrants are under discussion – and in some cases already in use. An international group of researchers wants to investigate how algorithms are being used for automated decision-making in asylum and migration and what the consequences are. Their project bears the title "Algorithmic Fairness for Asylum-Seekers and Refugees" and focuses on the question of how this special form of automated decision-making can contribute to fairness. The study involves legal, social and data scholars from the Hertie School, the European University Institute in Florence, and the universities of Copenhagen, Zagreb and Oxford.

Fairness as an ethical standard

Fairness is their central term. "We don't start with a fixed understanding of the term; we want to work it out as we go along," explains Cathryn Costello. "Notwithstanding, the term provides us with an ethical standard with which to examine and normatively evaluate the practices involved." Unlike in criminal law, where there are common notions of fairness across different legal systems, what is meant by a fair visa system is nowhere precisely defined for the field of asylum and migration, she says.

So far, there has been very little research on the use of algorithms in the context of asylum and migration, the principal investigator notes. "Our goal is to explore the potential consequences before their use becomes accepted as commonplace." As an initial evaluation revealed, "There are some controversial applications." In the U.K., for example, an algorithm was used that resulted in discriminatory visa allocations tied to certain nationalities. The Joint Council for the Welfare of Immigrants and Foxglove, two civil society organizations, were alerted and succeeded in stopping its application.

Photography project "Arriving": Malte Uchtmann's project is also about how society deals with refugees. The Hanover-based photographer focuses on the architectural infrastructure of the accommodation provided by the public sector. He notes, "Through architecture, a society communicates its values. Even though Germany has become known for its 'welcome culture', we both consciously and unconsciously create material and immaterial borders that make it difficult for refugees to settle down."

Project partner Dr. Derya Ozkul of the Refugee Studies Centre at the University of Oxford explains in more detail where and how algorithms are already being tested or applied: for example, to divide visa applications into simple and complicated categories or to screen asylum applicants for credibility. "They can be used to identify and assess the dialect an applicant speaks. For example, in the case of Syrian applicants, it can be used to check whether they actually speak the Arabic dialect that is common in Syria."

Technological applications such as dialect recognition and name transcription are already being implemented by the Federal Office for Migration and Refugees in Germany (BAMF). And other countries, such as the Netherlands, are following suit and testing applications that check the language of applicants. In Turkey, a test was recently carried out to check the origin of Uyghur-speaking applicants.

Who decides?

Regardless of what the software suggests, it is still government officials who ultimately make the assessment, the BAMF stressed. "But we researchers have to investigate the extent to which the responsible officials actually do make the decisions or are simply agreeing to what the software suggests," says Ozkul, a sociologist and migration researcher.

Costello and Ozkul have both done work on various refugee recognition practices and on identification and border control technologies. Derya Ozkul, for example, studied the registration practices of the UN Refugee Agency and the collection of biometric data from refugees in Lebanon. "In Jordan, iris recognition is even used on shoppers in the Zaatari refugee camp," says Cathryn Costello.

"Arriving" - photography project by Malte Uchtmann

To better understand fairness in ethical and legal terms, the international project team is looking at three dimensions. The first is individual fairness, which can be seen in the way an asylum seeker is interviewed and questioned about his or her ideas. The second dimension relates to the fair distribution of migrant men, women and children – and thus to the fair sharing of responsibility and burdens among host countries and municipalities.

What is the public perception?

The third dimension encompasses both the individual and the public perception of fairness. How do the asylum seekers and refugees themselves perceive the application process? Do they know whether an algorithm was used and what effect it had? Derya Ozkul will explore these questions in qualitative interviews with those affected. The project members also want to investigate the public perception with the help of a broad survey in eight EU countries.

Not only is it hard for laypeople to understand how algorithm-based decision-making works; the underlying data and criteria are also anything but transparent. "Newtech, or emerging technologies, are often opaque and difficult to explain," says Cathryn Costello. Whether this leads to a loss of trust, however, depends on the framing, she says. For example, a facial scan is seen as a means of mass surveillance and tends to be viewed critically. In contrast, technologies that identify risks posed by individuals meet with greater public approval.

Pilot projects are also using algorithms to try to align the interests of applicants with those of the host locations. "The algorithms sort refugees by skills and marital status with the goal of increasing their chance of finding work," says Cathryn Costello. However, the asylum seekers themselves are not asked where they would like to live, even though the technology would easily allow a matching of preferences.

Costello, a lawyer, sees the benefits of using algorithms this way: "For complex issues, they help to reach decisions faster and more efficiently." If the data and criteria used are transparent, an algorithm could even reveal biases that influence normal visa allocation processes. An algorithm should be designed and applied to give refugees a wide range of choices. This would be in line with the prevailing perceptions of fairness in our society and of a free life that is as self-determined as possible, Costello and Ozkul emphasize.

The contribution of science

The two researchers are convinced that science itself can contribute to more fairness in refugee and migration processes. They have both taught at the University of Oxford, where the world's first Refugee Studies Centre was founded in 1982. "Here, there is a long tradition of ethical reflection about the obligations and duties of scholars," says Cathryn Costello. "For example, whether there's an obligation to do more than seek the truth and to counteract injustice as well."

Costello herself sees her primary responsibility as conducting nonjudgmental research and contributing new knowledge. But of course, she also believes researchers have an obligation to the "research subjects" because, "We're working with people's stories, life experiences and data, after all." And what to do when you encounter injustice? As a researcher, Derya Ozkul can't solve the immediate problems of refugees, she says, "But we can publicize the injustices and discriminatory practices we encounter through our research."

According to this understanding, asylum and migration research could also assume a mediating position between people seeking protection and civil society organizations. "In this project, it will not be enough just to publish scientific findings," Ozkul is convinced. "To prevent discriminatory practices in the future, we also need to make the results as widely accessible as possible."

The refugees from Ukraine will put Europe's approach to migration and asylum to the test, Cathryn Costello emphasizes, and this will also give rise to new perspectives: "We can now observe whether it really is possible for people to simply move to where they want to live."

Photography project "Arriving" by Malte Uchtmann.